1. What is the problem?

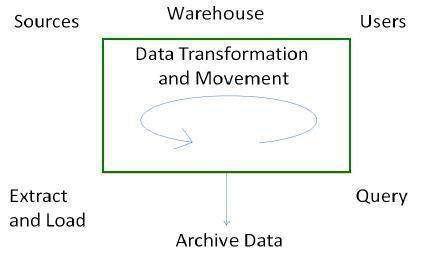

Data warehouses are optimized for read operations (often as read-only), but this implies that the user is not working with the most recent information. As most enterprise data warehouses operate around the clock, concurrent online updates are desired – but since updates are expensive, they can consume resources in a way that often reduces query performance.

2. Why is it important?

Users value uptime and currency of their data warehouse – as well as performance. While these attributes are not mutually exclusive, they cannot receive equal priority. Given certain strategies and algorithms that take advantage of modern hardware, it has become possible to get more of each – which will allow a data-driven enterprise to become more competitive in their execution. When considering large data flow of modern internet-based applications and internet-of-things – as well as the desire for machine learning and business intelligence – the needs of modern business have grown and so the ability for database systems to perform must grow also.

3. Why is it hard?

Utilization of memory means that anything that has been materialized or staged will be lost in the event of a crash or power failure – also use of memory reduces query performance. The alternative storage would be the persistent disk (which is recoverable where memory is not), but in the extract transform load (ETL) operation, fast execution is desired. SSDs may provide acceptable performance over HDDs, but SSDs wear out (which could cause downtime) with excessive modification – therefore some form of memory buffer should be used. Also, SSDs require an entire page to be rewritten for a single update – therefore updates should be minimized.

The design goals of MaSM include low query overhead with a small memory footprint, no random SSD writes, low total SSD writes, efficient migration, and ACID compliance – while still returning correct results. MaSM takes a database system from being completely read-optimized, to having realistic write performance for modern applications.

4. Why existing solutions do not work?

The previous solution was to update the data warehouse during a down time. However, this doesn’t work when there is no idle or down time. The other problem with this approach is that users do not want to wait for the data to be updated – and have less tolerance for working with out-of-date data. When performing in-place updates, it can slow the database by 2.2x-2.6x (95 percent interval) without outliers taking far longer. While almost half of this slow-down is from disk transaction patterns, mixed transactions without caching unacceptably reduces database performance. Caching the updates, while reducing the disk operations, has the disadvantage of consuming memory. An increase in memory consumption reduces the resources available for storing intermediate query results – so for previous solutions that worked by creating a copy of the migration in memory in order to make the new copy available, query performance suffered around 3.8x.

5. What is the core intuition for the solution?

Materialized Sort-Merge (MaSM) takes advantage of SSDs to cache incoming updates by using algorithms that lead to smaller memory footprints and lower overhead. This is based on a 2007 report by J. Becla and K.T. Lim which used a front-end OLTP system to construct the back-end OLAP data warehouse. Instead of just writing to a row store and reading from a column store, MaSM attempts to remove much of the memory load by materializing the column-oriented differential data on SSD. These well-formed updates take fewer resources to load into the main OLAP data warehouse (where more intensive updates had an adverse effect on query time). MaSM uses a sort-based join. Sort-based and hash-based joins are known to be efficient, but hash-based joins perform more I/O while sort-based joins preserve record order to allow for merge operations to take place at a higher level. Finally, the authors vary the granularity of the run index (coarse granularity leads to lower memory footprint while fine granularity improves range scans). In practice this should be tuned to the ranges requested by the workload.

6. Does the paper prove its claims?

MaSM claims to use only 7% of the traditional overhead using both synthetic range scans and the TPC-H workloads. To demonstrate these claims, the authors test the TPC-H queries on both column-store and row-store DBMS implementations in a way that ensures the total data is much greater than the memory can hold. During this baseline, the authors record the I/O operations and show that concurrent query and update operations typical has the worst performance.

The authors develop proofs for major design decisions (choosing memory buffer page size, number of 1 pass runs to merge into 2 pass runs, average record write to SSD, etc.). The authors relate these decisions to a cohesive system that accomplishes the five design goals stated earlier. The authors also discuss the bounds of performance expectation and what drives the outcome of each performance attribute.

7. What is the setup of analysis/experiments? is it sufficient?

All experiments are performed on a 2.33Ghz quad core Xeon 5345 CPU with 4GB RAM. Since the goal is to have as low a memory footprint as possible, it makes sense to use this configuration – at least by today’s standards. The main data is stored on a 7200rpm HDD while the cached updates are stored on a 32GB SSD. 100GB of synthetic data with 100byte records was used to generate random updates. In this approach, MaSM was shown to use 16MB memory and 4GB SSD space, given a page size of 64KB on the SSD. Using the TPC-H benchmark and 30GB of data, 4GB SSD space was also sufficient – however two TPC-H queries did not finish..

8. Are there any gaps in the logic/proof?

Aside from the fact that TPC-H failed to complete queries 17 and 20 (which is not investigated), the authors do not normalize the results of the TPC-H replay like the analysis of the synthetic results. This would be more meaningful because TPC-H queries are intended to vary in execution time. In this experiment, workload is not the independent variable, but query update handling is.

In various database systems, indexes are wrapped in a structure such as a b-+tree. Incorporating the differential update data into the main data will require this structure to be updated, which doesn’t seem to be discussed in detail.

9. Describe at least one possible next step.

There are efficient structures that can be used to merge data (example LSM trees) that would be a very good next step for this analysis. This will help integrated into indexed data – not just column stores. It is shown that MaSM has lowered memory footprint, and therefore improved query performance, so another step would be to try employ this principle on a distributed system. In many modern enterprise data systems, the size of the data exceeds that which can fit on a single node.

BibTex Citation

@inproceedings{athanassoulis2011masm,

title={MaSM: efficient online updates in data warehouses},

author={Athanassoulis, Manos and Chen, Shimin and Ailamaki, Anastasia and Gibbons, Phillip B and Stoica, Radu},

booktitle={Proceedings of the 2011 ACM SIGMOD International Conference on Management of data},

pages={865--876},

year={2011},

organization={ACM}

}