We were implementing a message queue (maybe to get away from ActiveMQ or SignalR) when it dawned on us that C#'s Queue<T>.Dequeue() method throws an exception when there is nothing in the queue.

In the initial Pull Request to fix the bug, a try-catch was put around Dequeue(), but that means using the interrupt pattern...

The interrupt pattern is often viewed as being slower, so the goal would be write an implementation to avoid raising an exception or handling one.

Basically, this method would Try to Dequeue and return false if it could not.

Let's try a few approaches to the TryDequeue implementation:

namespace System.Collections.Generic

{

/// <summary>

/// Extensions on Queue

/// </summary>

public static class QueueExtensions

{

/// <summary>

/// Performs a Dequeue first checking count

/// </summary>

/// <typeparam name="T">The type</typeparam>

/// <param name="queue">The Queue</param>

/// <param name="result">The result</param>

/// <returns>True if result found</returns>

public static bool TryDequeueCount<T>(this Queue<T> queue, out T result)

{

if ((queue?.Count ?? 0) > 0)

{

result = queue.Dequeue();

return true;

}

result = default;

return false;

}

/// <summary>

/// Performs a Dequeue using the enumerator to check if possible

/// </summary>

/// <typeparam name="T">The type</typeparam>

/// <param name="queue">The Queue</param>

/// <param name="result">The result</param>

/// <returns>True if result found</returns>

public static bool TryDequeueEnumerator<T>(this Queue<T> queue, out T result)

{

if (queue?.GetEnumerator().MoveNext() == true)

{

result = queue.Dequeue();

return true;

}

result = default;

return false;

}

/// <summary>

/// Performs a Dequeue catching any exceptions

/// </summary>

/// <typeparam name="T">The type</typeparam>

/// <param name="queue">The Queue</param>

/// <param name="result">The result</param>

/// <returns>True if result found</returns>

public static bool TryDequeueThrow<T>(this Queue<T> queue, out T result)

{

try

{

result = queue.Dequeue();

return true;

}

catch

{

// Ignored

}

result = default;

return false;

}

}

}

This API was based on the Queue<T>.TryDequeue(out T result) method as suggested by a colleague.

This sounded like a great approach except we'd have to change our NetStandard target.

But maybe it offered a performance advantage?

Let's target the right NetStandard and write some test code...

class DequeueTest

{

/// <summary>

/// Makes a queue of messages

/// </summary>

/// <param name="numberOfQueues">The number of queues</param>

/// <param name="queueSize">The size of each queue</param>

/// <returns>A collection of queues for testing</returns>

public static Queue<string>[] MakeTestArray(int numberOfQueues = 100000, int queueSize = 5)

{

var testArray = new Queue<string>[numberOfQueues];

for (int i = 0; i < numberOfQueues; i++)

{

var queue = new Queue<string>(queueSize);

for (int j = 0; j < queueSize; j++)

{

queue.Enqueue("tests");

}

testArray[i] = queue;

}

return testArray;

}

/// <summary>

/// Runs a test of each TryDequeue given a number of queues and queue size

/// </summary>

/// <param name="numberOfQueues">The number of queues</param>

/// <param name="queueSize">The size of each queue</param>

public static void Run(int numberOfQueues = 100000, int queueSize = 5)

{

var sw = new System.Diagnostics.Stopwatch();

var ta = MakeTestArray(numberOfQueues, queueSize);

sw.Start();

for (int i = 0; i < numberOfQueues; i++)

{

var current = ta[i];

}

sw.Stop();

Console.Write(sw.Elapsed.Ticks + ",\t");

sw.Restart();

for (int i = 0; i < numberOfQueues; i++)

{

var current = ta[i];

while (current.TryDequeue(out string _)) { }

}

sw.Stop();

Console.Write(sw.Elapsed.Ticks + ",\t");

ta = MakeTestArray(numberOfQueues, queueSize);

sw.Restart();

for (int i = 0; i < numberOfQueues; i++)

{

var current = ta[i];

while (current.TryDequeueCount(out string _)) { }

}

sw.Stop();

Console.Write(sw.Elapsed.Ticks + ",\t");

ta = MakeTestArray(numberOfQueues, queueSize);

sw.Restart();

for (int i = 0; i < numberOfQueues; i++)

{

var current = ta[i];

while (current.TryDequeueEnumerator(out string _)) { }

}

sw.Stop();

Console.Write(sw.Elapsed.Ticks + ",\t");

ta = MakeTestArray(numberOfQueues, queueSize);

sw.Restart();

for (int i = 0; i < numberOfQueues; i++)

{

var current = ta[i];

while (current.TryDequeueThrow(out string _)) { }

}

sw.Stop();

Console.WriteLine(sw.Elapsed.Ticks);

}

}

Here is the average of 30 runs (all times in Ticks):

Average Time (in ticks) to Dequeue 100k Queues

| Items in Queue | Time to Traverse Training Data | Framework Implementation | Count Implementation | MoveNext Implementation | Interrupt Implementation |

|---|---|---|---|---|---|

| 1 | 85 | 1223 | 769 | 4250 | 2764586 |

| 2 | 89 | 2266 | 1355 | 7111 | 2900740 |

| 4 | 89 | 4182 | 2741 | 12683 | 2566929 |

| 8 | 85 | 7727 | 4964 | 25196 | 3890715 |

| 16 | 89 | 15792 | 8899 | 46304 | 1961727 |

| 32 | 85 | 29724 | 18785 | 89751 | 1975518 |

| 64 | 85 | 58004 | 36481 | 177254 | 1974013 |

| 128 | 89 | 114340 | 71530 | 351468 | 2526171 |

| 256 | 89 | 226954 | 142646 | 700127 | 2605587 |

| 512 | 85 | 453322 | 282012 | 1402411 | 2541266 |

| 1024 | 89 | 968186 | 563182 | 2828222 | 3158801 |

| 2048 | 89 | 1807073 | 1122391 | 5685464 | 4259148 |

| 4096 | 85 | 3695966 | 2261828 | 11236762 | 6901183 |

| 8192 | 89 | 7425176 | 4524679 | 22414515 | 11408915 |

| 16384 | 89 | 14570487 | 9608051 | 45315573 | 21544792 |

| 32768 | 98 | 29357790 | 18174311 | 91511936 | 39762457 |

| 65536 | 119 | 62032218 | 36157367 | 183406812 | 83570126 |

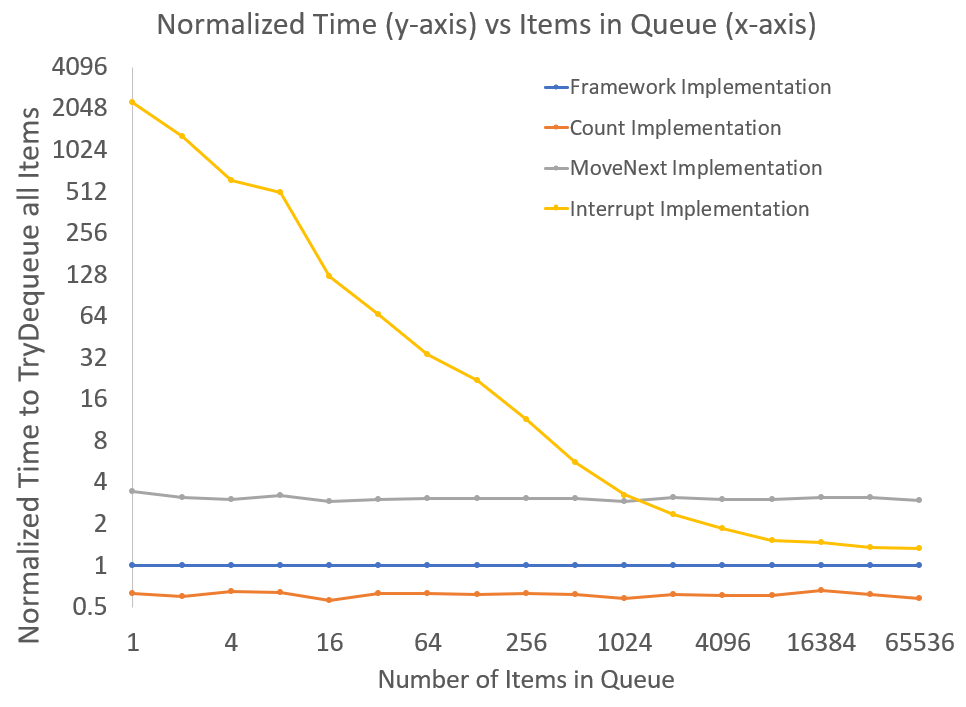

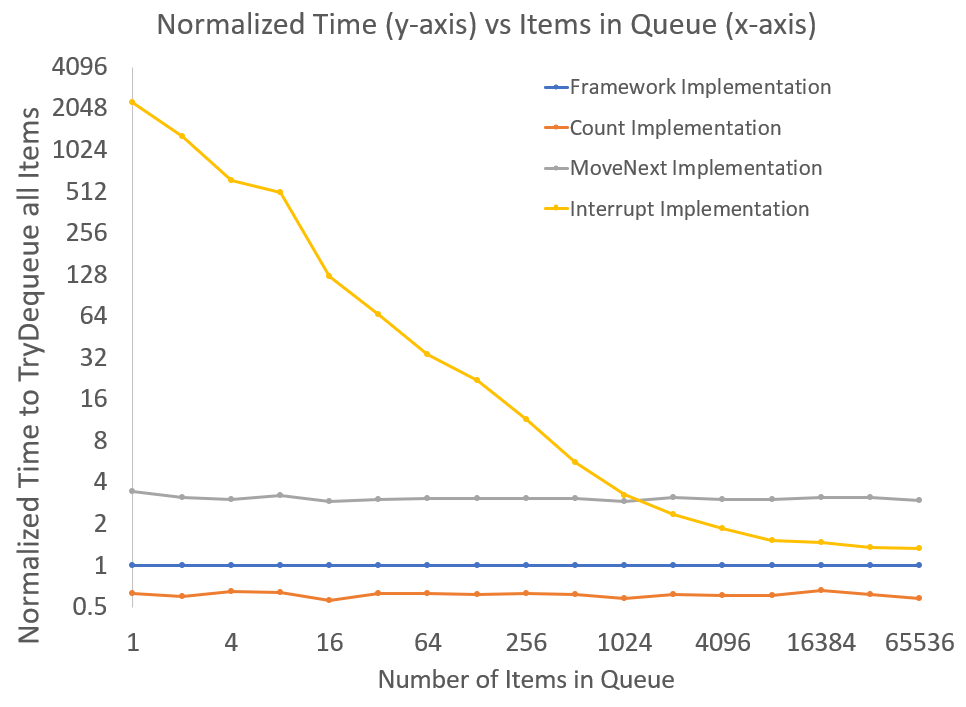

Let's normalize the times to the results of the framework implementation, shall we?

Normalized Average Time (with respect to Framework results)

| Items in Queue | Framework Implementation | Count Implementation | MoveNext Implementation | Interrupt Implementation |

|---|---|---|---|---|

| 1 | 1 | 0.63 | 3.48 | 2260.5 |

| 2 | 1 | 0.6 | 3.14 | 1280.11 |

| 4 | 1 | 0.66 | 3.03 | 613.8 |

| 8 | 1 | 0.64 | 3.26 | 503.52 |

| 16 | 1 | 0.56 | 2.93 | 124.22 |

| 32 | 1 | 0.63 | 3.02 | 66.46 |

| 64 | 1 | 0.63 | 3.06 | 34.03 |

| 128 | 1 | 0.63 | 3.07 | 22.09 |

| 256 | 1 | 0.63 | 3.08 | 11.48 |

| 512 | 1 | 0.62 | 3.09 | 5.61 |

| 1024 | 1 | 0.58 | 2.92 | 3.26 |

| 2048 | 1 | 0.62 | 3.15 | 2.36 |

| 4096 | 1 | 0.61 | 3.04 | 1.87 |

| 8192 | 1 | 0.61 | 3.02 | 1.54 |

| 16384 | 1 | 0.66 | 3.11 | 1.48 |

| 32768 | 1 | 0.62 | 3.12 | 1.35 |

| 65536 | 1 | 0.58 | 2.96 | 1.35 |

Visually, this might make more sense on a log2 - log2 plot...

These results are somewhat interesting.

First of all, since an exception is only raised when the Queue can no longer Dequeue, the interrupt pattern approaches framework times as the number of items in the queue increases.

Of course, for sizes less than 2^10 it is still the least performant.

We expect using MoveNext to be slower than framework times... 4x seems reasonable.

The other interesting finding is that the Count implementation of TryDequeue is consistently faster than framework.

How is that possible?

All Count does is return _size of the Queue.

Let's look at the source code...

// Dequeue implementation

public T Dequeue()

{

int head = _head;

T[] array = _array;

if (_size == 0)

{

ThrowForEmptyQueue();

}

T result = array[head];

if (RuntimeHelpers.IsReferenceOrContainsReferences<T>())

{

array[head] = default(T);

}

MoveNext(ref _head);

_size--;

_version++;

return result;

}

// TryDequeue implementation

public bool TryDequeue([MaybeNullWhen(false)] out T result)

{

int head = _head;

T[] array = _array;

if (_size == 0)

{

result = default(T);

return false;

}

result = array[head];

if (RuntimeHelpers.IsReferenceOrContainsReferences<T>())

{

array[head] = default(T);

}

MoveNext(ref _head);

_size--;

_version++;

return true;

}

Really there is no difference to explain the ~35% improvement of speed for the Count implementation.

Maybe this is one of the many times we have found that static methods are faster than instance methods.